2024 Update: Table’s features and capabilities are now integrated into AppSheet. Read more here.

In this post, I’ll show you how to use the new Google Tables Apps Script Bot.

When something happens in your Google Table – a new row, or a value changes – a bot can be set to trigger an Apps Script function.

It opens up a world of new possibilities.

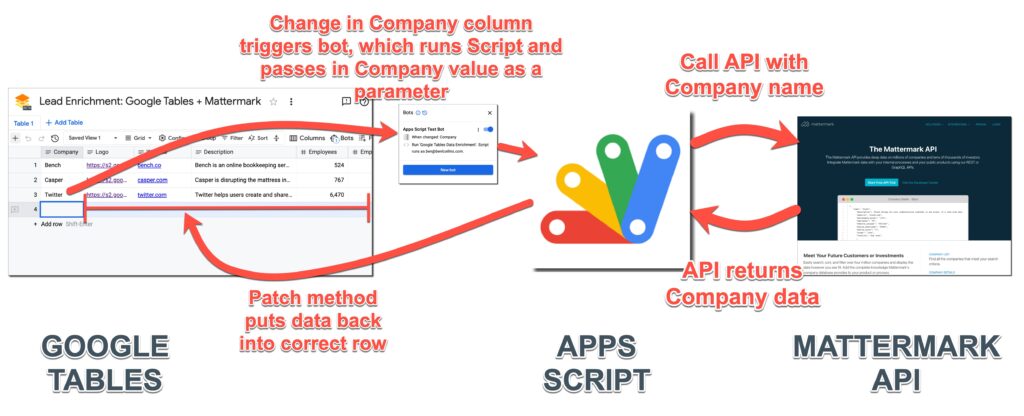

In this post, I’ll show you how to automatically enrich leads in Google Tables by retrieving data via the Mattermark API.

When I add a new company name into the first column, the new Google Tables Apps Script bot is triggered. The company name is passed to the Mattermark API and the relevant data is returned and finally added to that row in Google Tables.

Google Tables Apps Script Bot

Bots in Google Tables are automated actions that you do tasks for you, without needing to write any code.

Bots have three components:

- Firstly, a trigger, which is a change in your Google Table that fires the bot

- Secondly, any specific conditions that control the behavior of the bot, for example which rows it operates on

- And thirdly, the action, i.e. what the bot does

It’s this third option where we can choose to run an Apps Script file as the bot action.

The Google Tables documentation gives more background on executing Apps Script with bots.

Google Tables Apps Script Bot Example: Data Enrichment

In this example, we’ll use data from the Mattermark API to enrich company data in our Google Table.

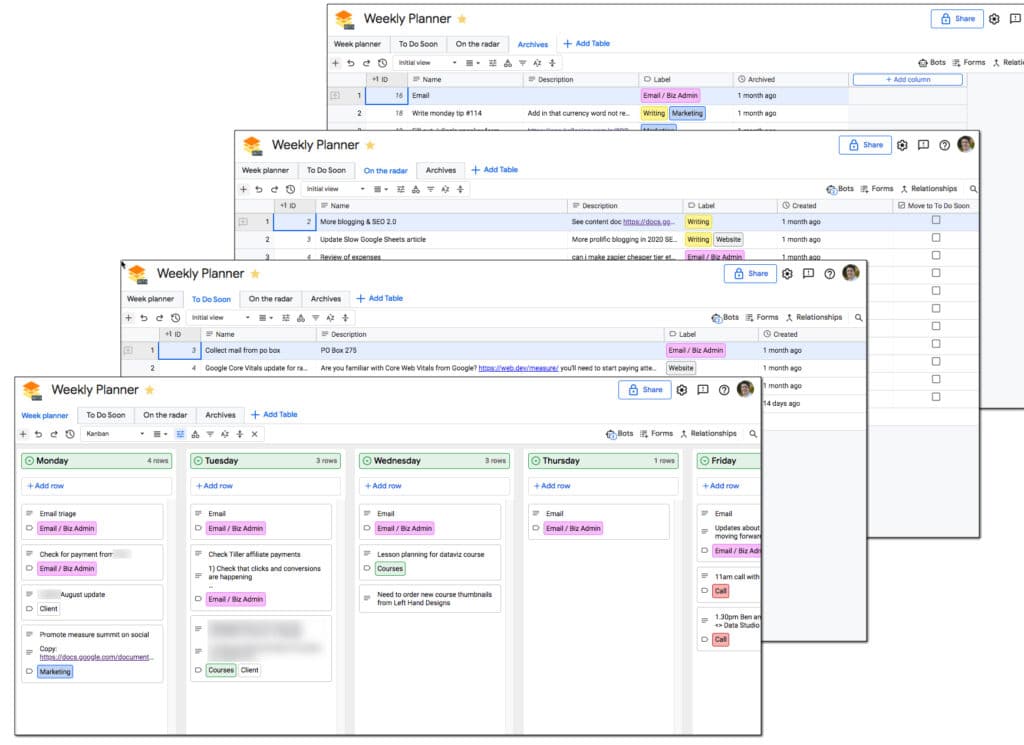

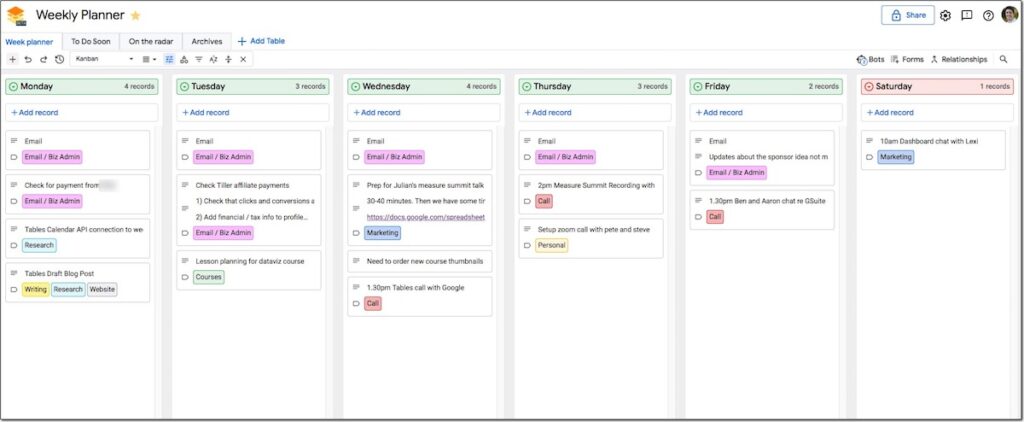

This is what the tool looks like in Google Tables:

When you type the company name, e.g. “Casper”, into the first column, the Google Tables Apps Script bot is triggered. This runs the Apps Script file and passes in the Company name as a parameter.

After that, the script file calls the Mattermark API with this company name and retrieves additional data for this company.

Finally, the script file adds this data back into the correct row of Google Tables using the PATCH method.

And here’s a flow chart of the overall workflow (click to enlarge):

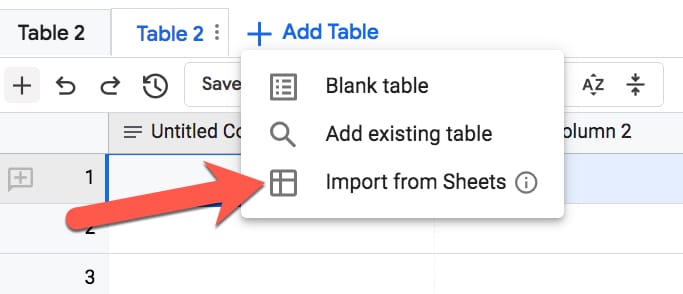

Google Tables Set Up

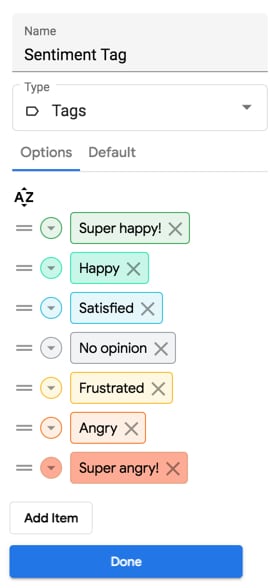

The first step is to create a new Google Table with the following columns:

| Column Heading | Data Type |

|---|---|

| Company | Text |

| Logo | Text |

| Website | Text |

| Description | Text |

| Employees | Number (integer) |

| Employees 6-Months Ago | Number (integer) |

| Revenue Range | Text |

| Website Uniques | Number (integer) |

| Mobile Downloads | Number (integer) |

| Funding Stage | Text |

| Total Funding | Number (integer) |

| City | Text |

| State | Text |

| Country | Text |

| Last updated | Update time |

The only column that you’ll manually enter data into is the first column, Company, which holds the name of the company. The rest will all be populated by the script automatically (except the “Last updated” column which Tables takes care of).

Google Tables Bot

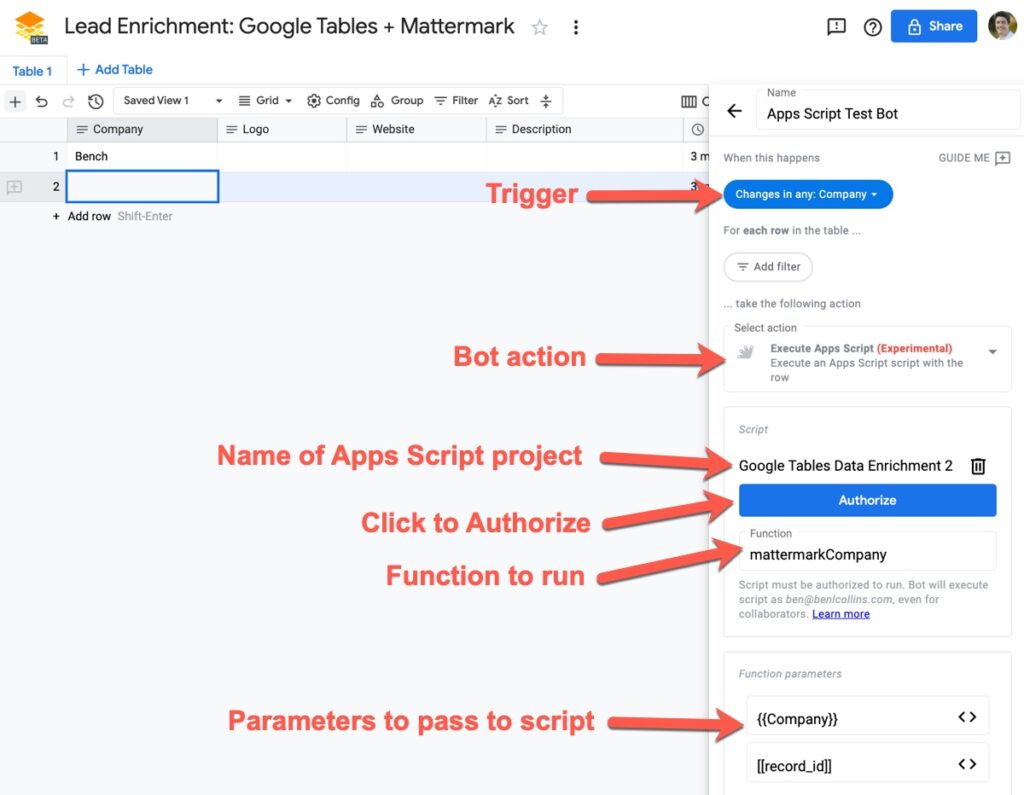

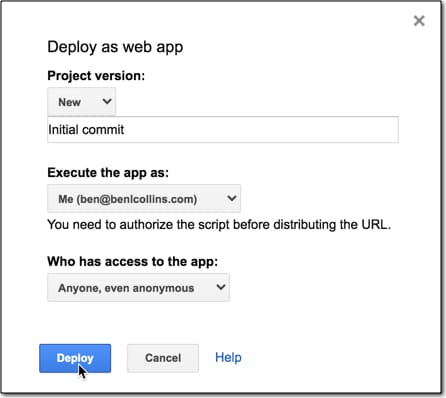

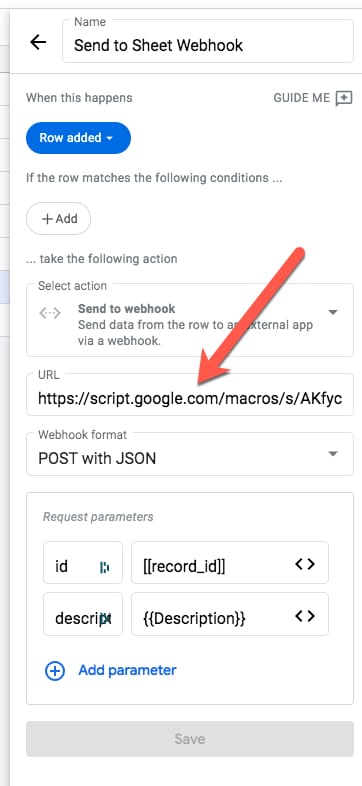

Next, create a bot with the following properties:

Trigger:Changes in any: Company

Action:Execute Apps Script

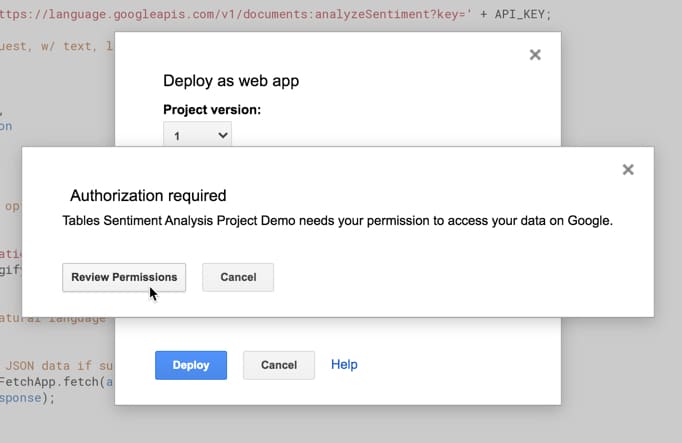

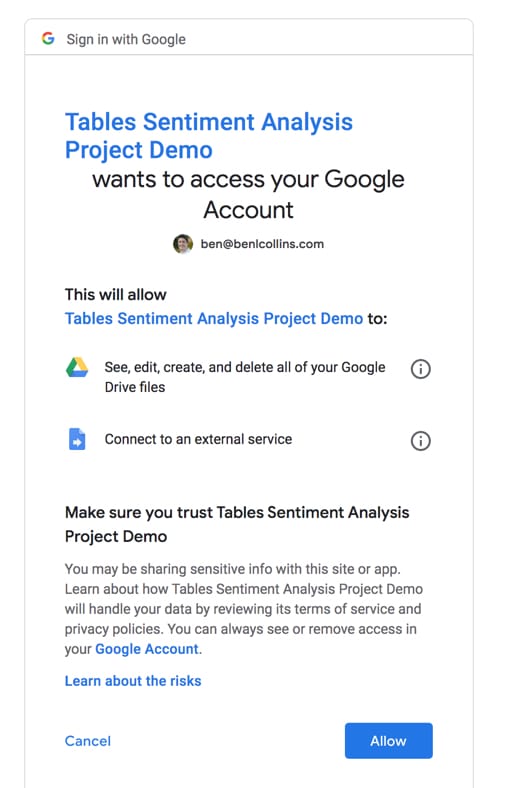

Then select the script file and function and authorize it (see below).

Function parameters:{{Company}} – so we can search for it on the Mattermark API[[record_id]] – the row ID for Google Tables so we can put the data back in the correct row

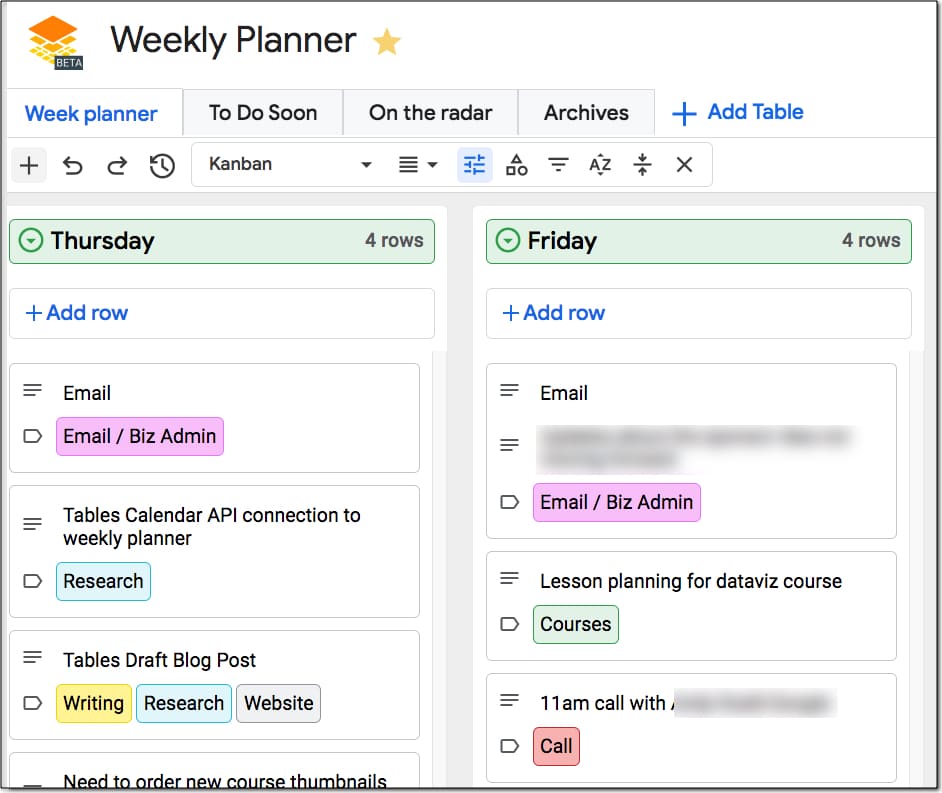

Here’s the bot setup in Google Tables (click to enlarge):

Apps Script file

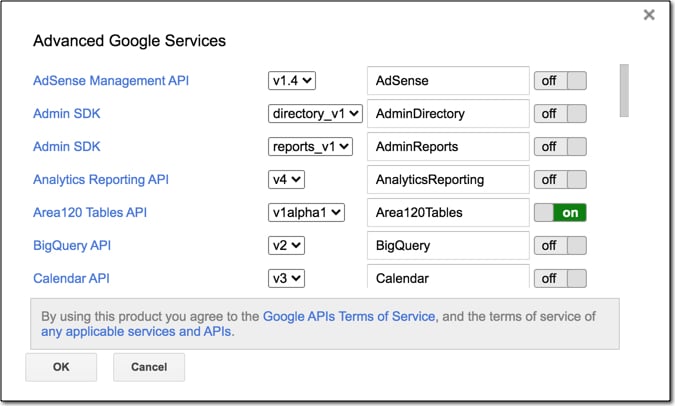

The Google Tables Advanced Service is required for this project, so that you can send data back into our Google Table.

Find it in under Services on the left sidebar, then search for Area120Tables. Add it to your project.

Once you’ve added this Service, you’ll notice your appsscript.json manifest file has been updated to include it as an enabled advanced service.

Next, add this script to the editor:

/**

* Global Variables

*/

const API_KEY = ''; // <-- enter your Mattermark API key here

const TABLE_NAME = ''; // <-- enter your Google Tables table ID here

/**

* function to retrive logo url

*/

function retrieveCompanyLogoURL(companyWebsite) {

// example

// companyWebsite = 'bench.co'

// setup the api

const base = 'https://s2.googleusercontent.com/s2/favicons?domain=';

const url = base + companyWebsite + '&sz=32';

return url;

}

/**

* function to retrieve company data from Mattermark

*/

function mattermarkCompany(companyName,recordID) {

// example

// companyName = 'facebook';

// set up the api

const base = 'https://api.mattermark.com/';

const endpoint = 'companies/'

const query = '?key=' + API_KEY + '&company_name=' + companyName;

const url = base + endpoint + query;

// call the api

const response = UrlFetchApp.fetch(url);

const data = JSON.parse(response.getContentText());

// parse the data

const companies = data.companies;

const firstCompany = companies[0];

const companyID = firstCompany.id;

console.log(companies);

console.log(firstCompany);

console.log(companyID);

// call the api to get specific company details

mattermarkCompanyDetails(companyID,recordID);

}

/**

* function to retrive company details

*/

function mattermarkCompanyDetails(companyID,recordID) {

// example

// companyID = '159108';

// set up the api

const base = 'https://api.mattermark.com/';

const endpoint = 'companies/' + companyID;

const query = '?key=' + API_KEY;

const url = base + endpoint + query;

// call the api

const response = UrlFetchApp.fetch(url);

const data = JSON.parse(response.getContentText());

console.log(response.getResponseCode());

//console.log(response.getContentText());

// parse data

const companyWebsite = data.website;

const companyDescription = data.description;

const companyEmployees = data.employees;

const companyEmployeesSixMonthsAgo = data.employees_6_months_ago;

const websiteUniques = data.website_uniques;

const mobileDownloads = data.mobile_downloads;

const fundingStage = data.stage;

const totalFunding = data.total_funding;

const city = data.city;

const state = data.state;

const country = data.country;

const revenueRange = data.revenue_range;

// add company logo data

const companyLogo = retrieveCompanyLogoURL(companyWebsite);

const enrichmentData = {

'Logo': companyLogo,

'Website': companyWebsite,

'Description': companyDescription,

'Employees': parseInt(companyEmployees),

'Employees 6-Months Ago': parseInt(companyEmployeesSixMonthsAgo),

'Revenue Range': revenueRange,

'Website Uniques': parseInt(websiteUniques) || 0,

'Mobile Downloads': parseInt(mobileDownloads) || 0,

'Funding Stage': fundingStage,

'Total Funding': parseInt(totalFunding),

'City': city,

'State': state,

'Country': country

};

console.log(enrichmentData);

// send data back to Google Tables

const rowName = 'tables/' + TABLE_NAME + '/rows/' + recordID;

Area120Tables.Tables.Rows.patch({values: enrichmentData}, rowName);

}

The code can be found here on GitHub.

Script Notes

It’s a rough first draft! I’ve noted some improvements below.

Line 26: The mattermarkCompany function calls the API with the Company name to retrieve the Company ID.

It then calls mattermarkCompanyDetails function and passes in the Company ID.

Line 42: You’ll notice that I chose the first item of the company data array, regardless of whether this is the correct company. This was a shortcut to test out this idea.

Although this will often work, it won’t always grab the correct data because there could be multiple companies with the same name and you actually want the second one in the list. For a more robust tool, this would need to be developed further (noted in the improvements section below).

Line 57: The script calls the API again (a different endpoint) with this ID to get the additional data.

Subsequently, the script parses the returned data and puts it into an object (line 91).

Line 110: This line is the PATCH method to add the new data back into the correct row of my Google Table.

Script Improvements

There are a number of improvements that could be made:

- Include error handling when no company data is found.

- Deal with the multiple company scenario discussed above.

- The Google Tables PATCH method could be moved into its own function, to further improve the code organization.

- Include more data specific to the scenario (Mattermark has way more than I used in this example).

Sadly, I’ve used up my trial quota on the Mattermark API. Unfortunately, they only have annual plans available at over $1k, so for now, I’m not going to develop this any further.

In Summary

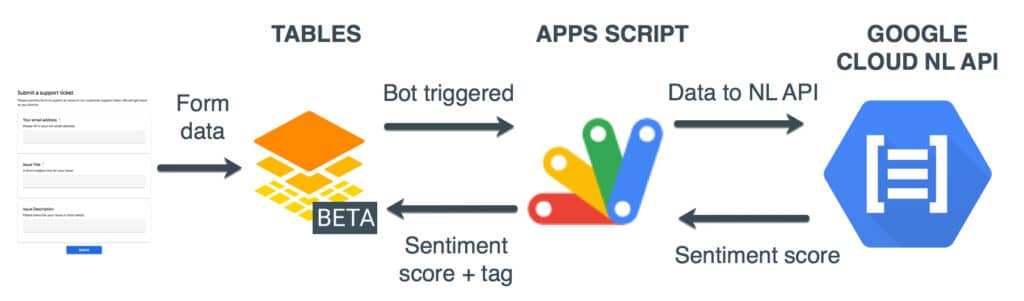

To sum up, the new Google Tables Apps Script bot is a great addition to Google Tables.

It opens up the door to all sorts of interesting automations with other Google Workspace tools or third-party services.

For example, suppose you have a Google Table of your employees and you add a new hire. This could trigger a bot that runs an Apps Script file to generate all the onboarding docs for that new employee, pre-filled with their data.

I’m excited to keep using it and see how else I can integrate Google Table automation into my workflows.